CLI Web Spider

Latest revision as of 19:12, 22 September 2022

This short manual shows three ways to create a simple web spider using the CLI on Linux/Ubuntu. The article originates from my question How do I create a CLI Web Spider that uses keywords and filters content? at Ask Ubuntu. Before asking the question, I read a few related topics, listed below, but none of the solutions fit my needs.

- Web spider for Ubuntu

- How to write a Web spider on Linux system

The task: How to create a simple Web Spider in Ubuntu CLI

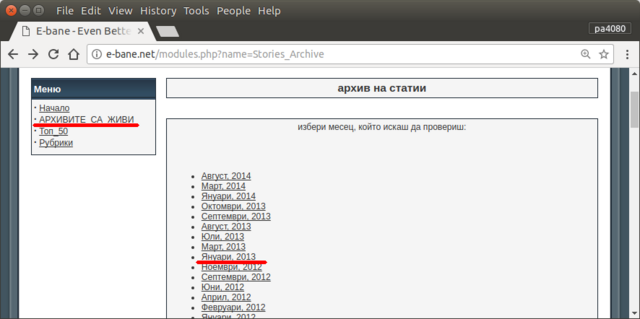

I want to find my articles on the obsolete literature forum e‑bane.net. Some of the forum modules are disabled, so I can't get a list of articles by author. The site is also not indexed by search engines such as Google, Yandex, etc.

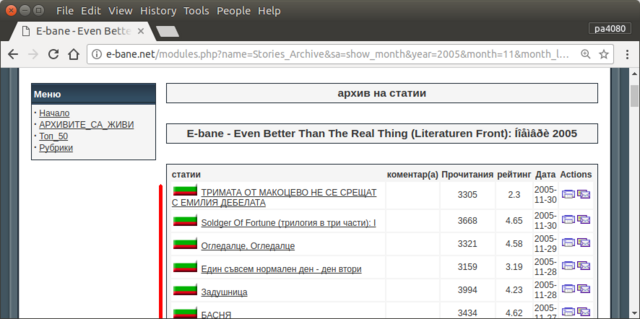

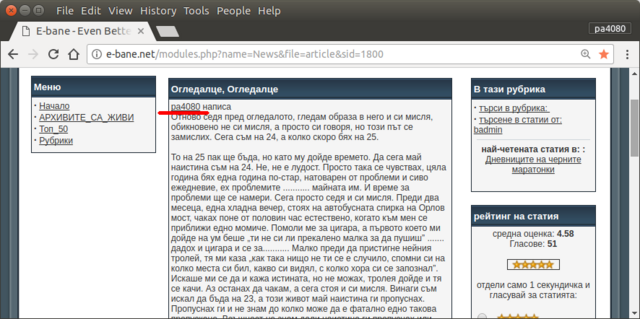

The only way to find all of my articles is to open the archive page of the site (Figure 1), select a certain year and month, e.g. January 2013 (Figure 1), and then inspect each article (Figure 2) to check whether my nickname, pa4080, is written at the beginning (Figure 3). But there are a few thousand articles.

Solution 1: Bash script that uses Wget

To solve this task I've created the following simple Bash script, which mainly uses the CLI tool wget.

    #!/bin/bash
    # Name: web-spider.wget.sh

    TARGET_URL='http://e-bane.net/modules.php?name=Stories_Archive'
    KEY_WORDS=('pa4080' 's0ther')
    MAP_FILE='url.map'
    OUT_FILE='url.list'

    get_url_map() { # Use 'wget' as spider and output the result into a file (and stdout)
        wget --spider --force-html -r -l2 "${TARGET_URL}" 2>&1 | grep '^--' | awk '{ print $3 }' | tee -a "$MAP_FILE"
    }

    filter_url_map() { # Apply some filters to the $MAP_FILE and keep only the URLs, that contain 'article&sid'
        uniq "$MAP_FILE" | grep -v '\.\(css\|js\|png\|gif\|jpg\|txt\)$' | grep 'article&sid' | sort -u > "${MAP_FILE}.uniq" && mv "${MAP_FILE}.uniq" "$MAP_FILE"
        printf '\n# -----\nThe number of the pages to be scanned: %s\n' "$(cat "$MAP_FILE" | wc -l)"
    }

    get_key_urls() {
        counter=1
        while IFS= read -r URL; do # Do this for each line in the $MAP_FILE
            for KEY_WORD in "${KEY_WORDS[@]}"; do # For each $KEY_WORD in $KEY_WORDS
                if [[ ! -z "$(wget -qO- "${URL}" | grep -io "${KEY_WORD}" | head -n1)" ]]; then # Check if the $KEY_WORD exists within the content of the page,
                    echo "${URL}" | tee -a "$OUT_FILE" # if it is true echo the particular $URL into the $OUT_FILE
                    printf '%s\t%s\n' "${KEY_WORD}" "YES"
                fi
            done
            printf 'Progress: %s\r' "$counter"; ((counter++))
        done < "$MAP_FILE"
    }

    # Call the functions
    get_url_map
    filter_url_map
    get_key_urls

The script has three functions:

- The first function, get_url_map(), uses wget with the --spider option (which means that it will just check that pages are there) and creates a recursive (-r) URL map, $MAP_FILE, of the $TARGET_URL with depth level -l2. (Another example can be found here: Convert Website to PDF.) In the current case the $MAP_FILE contains about 20 000 URLs.

- The second function, filter_url_map(), simplifies the content of the $MAP_FILE. In this case we need only the lines (URLs) that contain the string article&sid, and there are about 3000 of them. More ideas can be found here: How to remove particular words from lines of a text file?

- The third function, get_key_urls(), uses wget -qO- (in the same way as the command curl) to output the content of each URL from the $MAP_FILE and tries to find any of the $KEY_WORDS within it. If any of the $KEY_WORDS is found within the content of a particular URL, that URL is saved in the $OUT_FILE.
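The filtering and matching logic of the second and third functions can also be prototyped outside the shell. The following is only a minimal Python sketch under stated assumptions, not part of the original script: the names `filter_urls` and `find_keywords` and the sample URLs are hypothetical, the code works on strings that are already in memory instead of calling wget, and keywords are matched as plain substrings, whereas grep treats them as regular expressions.

```python
import re

# Extensions of static assets that the Bash filter drops
# (the same list as in the grep -v pattern).
ASSET_RE = re.compile(r'\.(css|js|png|gif|jpg|txt)$')


def filter_urls(urls, marker='article&sid'):
    """Keep unique URLs that contain `marker` and are not static assets."""
    kept = {u for u in urls if marker in u and not ASSET_RE.search(u)}
    return sorted(kept)


def find_keywords(text, keywords):
    """Return the keywords that occur in `text`, ignoring case (like grep -i)."""
    lowered = text.lower()
    return [k for k in keywords if k.lower() in lowered]


if __name__ == '__main__':
    # Hypothetical sample data, for illustration only.
    sample = [
        'http://e-bane.net/modules.php?name=News&file=article&sid=2',
        'http://e-bane.net/themes/style.css',
        'http://e-bane.net/modules.php?name=News&file=article&sid=1',
        'http://e-bane.net/modules.php?name=News&file=article&sid=2',
    ]
    for url in filter_urls(sample):
        print(url)
    print(find_keywords('Posted by PA4080 in January 2013', ['pa4080', 's0ther']))
```

Keeping the two steps as pure functions makes them easy to check on a handful of URLs before starting a scan that takes about an hour.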

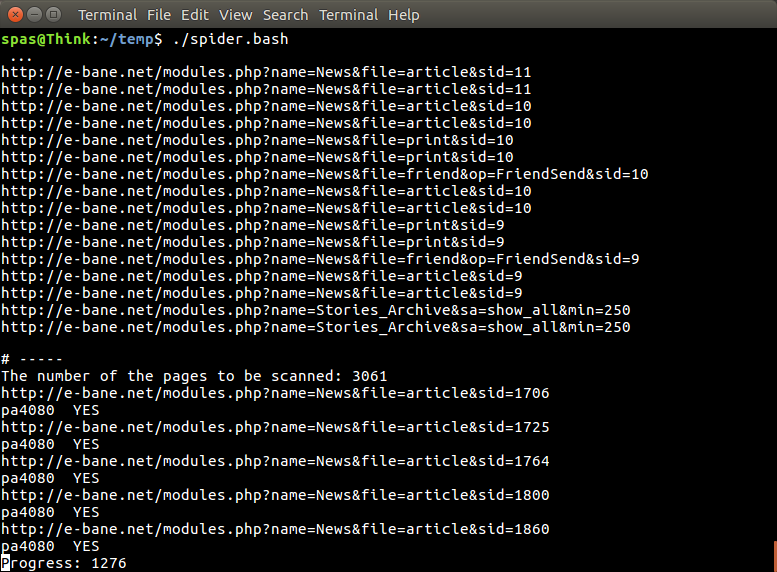

During the working process the output of the script looks as shown in Figure 4. It takes about 63 minutes to finish.

Solution 2: Python3 script

This solution was provided by @dan as an answer to my question on Ask Ubuntu.

    #!/usr/bin/python3
    # Name: web-spider.py

    from urllib.parse import urljoin
    import json

    import bs4
    import click
    import aiohttp
    import asyncio
    import async_timeout


    BASE_URL = 'http://e-bane.net'


    async def fetch(session, url):
        # Download a page; on timeout, report it and retry once.
        # Note: async_timeout 4.x and later only support 'async with'.
        try:
            async with async_timeout.timeout(20):
                async with session.get(url) as response:
                    return await response.text()
        except asyncio.TimeoutError:
            print('[{}]{}'.format('timeout error', url))
            async with async_timeout.timeout(20):
                async with session.get(url) as response:
                    return await response.text()


    async def get_result(user):
        target_url = 'http://e-bane.net/modules.php?name=Stories_Archive'
        res = []
        async with aiohttp.ClientSession() as session:
            html = await fetch(session, target_url)
            html_soup = bs4.BeautifulSoup(html, 'html.parser')
            date_module_links = parse_date_module_links(html_soup)
            for dm_link in date_module_links:
                html = await fetch(session, dm_link)
                html_soup = bs4.BeautifulSoup(html, 'html.parser')
                thread_links = parse_thread_links(html_soup)
                print('[{}]{}'.format(len(thread_links), dm_link))
                for t_link in thread_links:
                    thread_html = await fetch(session, t_link)
                    t_html_soup = bs4.BeautifulSoup(thread_html, 'html.parser')
                    if is_article_match(t_html_soup, user):
                        print('[v]{}'.format(t_link))
                        # to get main article, uncomment below code
                        # res.append(get_main_article(t_html_soup))
                        # code below is used to get thread link
                        res.append(t_link)
                    else:
                        print('[x]{}'.format(t_link))
            return res


    def parse_date_module_links(page):
        a_tags = page.select('ul li a')
        hrefs = [x.get('href') for x in a_tags]
        return [urljoin(BASE_URL, x) for x in hrefs]


    def parse_thread_links(page):
        a_tags = page.select('table table tr td > a')
        hrefs = [x.get('href') for x in a_tags]
        # filter href with 'file=article' (skip <a> tags without href)
        valid_hrefs = [x for x in hrefs if x and 'file=article' in x]
        return [urljoin(BASE_URL, x) for x in valid_hrefs]


    def is_article_match(page, user):
        main_article = get_main_article(page)
        return main_article.text.startswith(user)


    def get_main_article(page):
        td_tags = page.select('table table td.row1')
        td_tag = td_tags[4]
        return td_tag


    @click.command()
    @click.argument('user')
    @click.option('--output-filename', default='out.json', help='Output filename.')
    def main(user, output_filename):
        loop = asyncio.get_event_loop()
        res = loop.run_until_complete(get_result(user))
        # if you want to return main article, convert html soup into text
        # text_res = [x.text for x in res]
        # else just put res on text_res
        text_res = res
        with open(output_filename, 'w') as f:
            json.dump(text_res, f)


    if __name__ == '__main__':
        main()

    $ cat requirement.txt
    aiohttp>=2.3.7
    beautifulsoup4>=4.6.0
    click>=6.7

Here is the python3 version of the script (tested on python3.5 on Ubuntu 17.10).

How to use:

- Put both pieces of code in files. For example, the code file is script.py and the package file is requirement.txt.

- Run pip install -r requirement.txt.

- Run the script, for example: python3 script.py pa4080

It uses several libraries:

- click for argument parsing

- beautifulsoup for HTML parsing

- aiohttp for HTML downloading

Things to know to develop the program further (other than the docs of the required packages):

- Python libraries: asyncio, json and urllib.parse

- CSS selectors (MDN web docs) and some HTML. See also how to use CSS selectors in your browser, such as in this article

How it works:

- First I created a simple HTML downloader. It is a modified version of the sample given in the aiohttp docs.

- After that I created a simple command-line parser which accepts a username and an output filename.

- Then I created a parser for thread links and the main article. Using pdb and simple URL manipulation should do the job.

- Finally I combined the functions and put the main article in JSON, so other programs can process it later.
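The link-parsing step can be tried without a network connection. The sketch below is a stand-in under stated assumptions, not the code from the answer: it uses Python's standard `html.parser` module instead of BeautifulSoup and CSS selectors, and the names `LinkCollector` and `article_links` as well as the sample HTML are hypothetical. It only illustrates the idea of collecting `href` values, keeping those that contain `file=article`, and resolving them against the base URL with `urljoin`.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

BASE_URL = 'http://e-bane.net'


class LinkCollector(HTMLParser):
    """Collect the href attribute of every <a> tag in the fed HTML."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == 'a':
            href = dict(attrs).get('href')
            if href:  # skip anchors without an href
                self.hrefs.append(href)


def article_links(html, base_url=BASE_URL):
    """Return absolute URLs of the links whose href contains 'file=article'."""
    collector = LinkCollector()
    collector.feed(html)
    return [urljoin(base_url, h) for h in collector.hrefs if 'file=article' in h]


if __name__ == '__main__':
    # Hypothetical fragment of an archive page, for illustration only.
    sample_html = (
        '<table><tr><td>'
        '<a href="modules.php?name=News&amp;file=article&amp;sid=7">Title</a>'
        '<a href="modules.php?name=Stories_Archive">Archive</a>'
        '</td></tr></table>'
    )
    for link in article_links(sample_html):
        print(link)
```

Unlike the CSS selectors in the script, this sketch looks at every link on the page, so on the real site it may need an additional filter.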

Some ideas for developing it further:

- Create another subcommand that accepts a date module link: it can be done by separating the method that parses the date module into its own function and combining it with a new subcommand.

- Cache the date module links: create a cache JSON file after getting the thread links, so the program doesn't have to parse the links again. Or even cache the entire main article of each thread, even if it doesn't match.
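The caching idea could look roughly like the following minimal sketch. It assumes a JSON file that maps each date module link to its list of thread links. The function names `load_cache`, `save_cache` and `cached_thread_links` and the `parse` callback are hypothetical and are not part of the script above. In the real script `parse` would have to download the page and call `parse_thread_links()`.

```python
import json
import os


def load_cache(path):
    """Return the cached mapping, or an empty dict if there is no cache file yet."""
    if not os.path.exists(path):
        return {}
    with open(path, encoding='utf-8') as f:
        return json.load(f)


def save_cache(path, cache):
    """Write the mapping {date module link: [thread links]} as JSON."""
    with open(path, 'w', encoding='utf-8') as f:
        json.dump(cache, f, ensure_ascii=False, indent=2)


def cached_thread_links(dm_link, cache, parse):
    """Return the thread links for `dm_link`, calling `parse` only on a cache miss."""
    if dm_link not in cache:
        cache[dm_link] = parse(dm_link)
    return cache[dm_link]


if __name__ == '__main__':
    cache_file = 'thread-links.cache.json'  # hypothetical file name
    cache = load_cache(cache_file)
    # A dummy parser stands in for the real download-and-parse step.
    links = cached_thread_links('http://e-bane.net/archive-2013-01',
                                cache, lambda link: [link + '/thread-1'])
    save_cache(cache_file, cache)
    print(links)
```

On a second run the dummy parser is not called again, because the links are read from the JSON file.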

This is not the most elegant answer, but I think it is better than using the Bash answer.

- It uses Python, which means it can be used cross-platform.

- Simple installation: all required packages can be installed using pip.

- It can be developed further: the more readable the program is, the easier it is to develop.

- It does the same job as the above Bash script in only 13 minutes.

Solution 3: Bash script that uses Lynx

I've improved my script based on this answer provided by @karel. Now the script uses lynx instead of wget. As a result it becomes significantly faster.

The current version does the same job in 15 minutes when there are two search keywords and in only 8 minutes if we search for only one keyword. With a single keyword that is faster than the Python solution provided by @dan, which takes 13 minutes.

In addition, lynx provides better handling of non-Latin characters.

    #!/bin/bash
    # Name: web-spider.lynx.sh

    TARGET_URL='http://e-bane.net/modules.php?name=Stories_Archive'
    KEY_WORDS=('pa4080') # KEY_WORDS=('word' 'some short sentence')
    MAP_FILE='url.map'
    OUT_FILE='url.list'

    get_url_map() {
        # Use 'lynx' as spider and output the result into a file
        lynx -dump "${TARGET_URL}" | awk '/http/{print $2}' | uniq -u > "$MAP_FILE"
        while IFS= read -r target_url; do
            lynx -dump "${target_url}" | awk '/http/{print $2}' | uniq -u >> "${MAP_FILE}.full"
        done < "$MAP_FILE"
        mv "${MAP_FILE}.full" "$MAP_FILE"
    }

    filter_url_map() {
        # Apply some filters to the $MAP_FILE and keep only the URLs, that contain 'article&sid'
        uniq "$MAP_FILE" | grep -v '\.\(css\|js\|png\|gif\|jpg\|txt\)$' | grep 'article&sid' | sort -u > "${MAP_FILE}.uniq"
        mv "${MAP_FILE}.uniq" "$MAP_FILE"
        printf '\n# -----\nThe number of the pages to be scanned: %s\n' "$(cat "$MAP_FILE" | wc -l)"
    }

    get_key_urls() {
        counter=1
        # Do this for each line in the $MAP_FILE
        while IFS= read -r URL; do
            # For each $KEY_WORD in $KEY_WORDS
            for KEY_WORD in "${KEY_WORDS[@]}"; do
                # Check if the $KEY_WORD exists within the content of the page, if it is true echo the particular $URL into the $OUT_FILE
                if [[ ! -z "$(lynx -dump -nolist "${URL}" | grep -io "${KEY_WORD}" | head -n1)" ]]; then
                    echo "${URL}" | tee -a "$OUT_FILE"
                    printf '%s\t%s\n' "${KEY_WORD}" "YES"
                fi
            done
            printf 'Progress: %s\r' "$counter"; ((counter++))
        done < "$MAP_FILE"
    }

    # Call the functions
    get_url_map
    filter_url_map
    get_key_urls