LVM Basic Operations: Difference between revisions

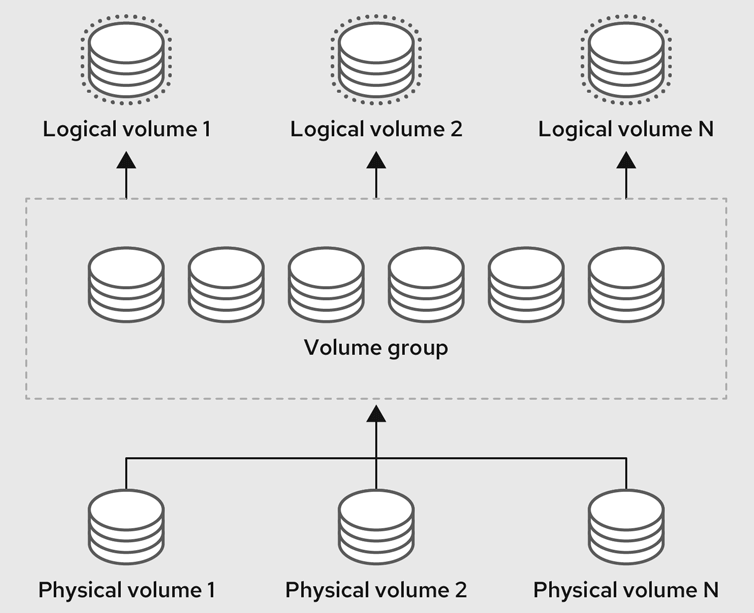

Revision as of 11:29, 12 September 2022

Overview of logical volume management (LVM)

Logical volume management (LVM) creates a layer of abstraction over physical storage, which helps you to create logical storage volumes. This provides much greater flexibility in a number of ways than using physical storage directly.

In addition, the hardware storage configuration is hidden from the software so it can be resized and moved without stopping applications or unmounting file systems. This can reduce operational costs.

The following are the components of LVM – they are illustrated on the diagram shown at Figure 1:

- Physical volume: A physical volume (PV) is a partition or whole disk designated for LVM use. For more information, see Managing LVM physical volumes.

- Volume group: A volume group (VG) is a collection of physical volumes (PVs), which creates a pool of disk space out of which logical volumes can be allocated. For more information, see Managing LVM volume groups.

- Logical volume: A logical volume represents a mountable storage device. For more information, see Managing LVM logical volumes.

Physical volumes (PV) Display

pvdisplay – Display various attributes of physical volume(s). pvdisplay shows the attributes of PVs, like size, physical extent size, space used for the VG descriptor area, etc.

sudo pvdisplay

--- Physical volume ---

PV Name /dev/nvme0n1p3

VG Name vg_name

PV Size <930.54 GiB / not usable 4.00 MiB

Allocatable yes

PE Size 4.00 MiB

Total PE 238216

Free PE 155272

Allocated PE 82944

PV UUID BKK3Cm-CAzY-rNah-o3Ox-L7FY-aNIY-ysUSIL
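The sizes in this output follow from the extent counts: each figure is a number of physical extents multiplied by the PE size. A minimal sketch of that arithmetic, assuming a hypothetical helper named pe_to_gib (it is not an LVM command) and the values from the output above:

```shell
# pe_to_gib is a hypothetical helper, not part of LVM.
# Usage: pe_to_gib <extent_count> <pe_size_in_MiB>
# Prints the size in GiB with two decimals.
pe_to_gib() {
    awk -v n="$1" -v s="$2" 'BEGIN { printf "%.2f\n", n * s / 1024 }'
}

pe_to_gib 238216 4   # Total PE     -> 930.53
pe_to_gib 82944 4    # Allocated PE -> 324.00
pe_to_gib 155272 4   # Free PE      -> 606.53
```

Total PE multiplied by PE Size gives the usable size that pvs reports as PSize.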

pvs – Display information about physical volumes. pvs is the preferred alternative to pvdisplay: it shows the same information and more, using a more compact and configurable output format.

sudo pvs

PV VG Fmt Attr PSize PFree

/dev/nvme0n1p3 vg_name lvm2 a-- 930.53g 626.53g
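As an illustration of the configurable output, pvs can be asked for specific columns with -o, and the result can be post-processed. The sketch below assumes a hypothetical helper named pv_usage; it reads pvs-style rows from standard input so it can be tried on a machine without LVM.

```shell
# pv_usage is a hypothetical helper: it reads rows of
# "<pv> <vg> <size_GiB> <free_GiB>" and prints the used percentage per PV.
# A leading "<" (printed by LVM for rounded values) is stripped first.
pv_usage() {
    awk 'NF >= 4 {
        gsub(/</, "")
        if ($3 > 0) printf "%s %s %.1f%% used\n", $1, $2, ($3 - $4) / $3 * 100
    }'
}

# On a real system, feed it from pvs (requires root):
#   sudo pvs --noheadings --units g --nosuffix \
#       -o pv_name,vg_name,pv_size,pv_free | pv_usage

# Trying it with the figures from the sample output above:
echo "/dev/nvme0n1p3 vg_name 930.53 626.53" | pv_usage
```

With the sample row this prints "/dev/nvme0n1p3 vg_name 32.7% used".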

# `lvdisplay` - Display information about a logical volume. Shows the attributes of LVs, like size, read/write status, snapshot information, etc.

# `lvs` is a preferred alternative that shows the same information and more, using a more compact and configurable output format.

## Extend the VG located on /dev/sda to also encompass /dev/sdb

lsblk # check the devices

sudo pvcreate /dev/sdb # create a physical volume on /dev/sdb

sudo pvdisplay # check

sudo vgextend vg_name /dev/sdb # extend the existing volume group

df -hT # check

sudo vgdisplay # check

# At this point you can create a new logical volume or extend an existing one

## Extend a logical volume - note that the volume group containing the LV must have enough free space

sudo lvextend -L +10G /dev/mapper/lv_name

sudo resize2fs /dev/mapper/lv_name

df -h

### for a swap volume, run instead: sudo mkswap /dev/mapper/xxxx-swap_1

### then edit /etc/fstab and change the UUID if the swap is mounted by UUID

## Extend to all available space and resize the FS at the same time

sudo lvextend --resizefs -l +100%FREE /dev/mapper/lv_name

df -h
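Because lvextend fails when the volume group lacks free extents, it can help to compare the requested growth with the free space of the VG first. A minimal sketch, assuming a hypothetical helper named can_extend that takes both values in MiB:

```shell
# can_extend is a hypothetical helper, not an LVM command.
# Usage: can_extend <wanted_MiB> <vg_free_MiB>
# Exit status 0 means the requested growth fits into the free space.
can_extend() {
    awk -v want="$1" -v free="$2" 'BEGIN { exit !(want + 0 <= free + 0) }'
}

# On a real system the free space could be read like this (requires root):
#   free=$(sudo vgs --noheadings --units m --nosuffix -o vg_free vg_name | tr -d ' <')
# A made-up sample value is used here so the check can be tried anywhere.
free=20480.00

if can_extend 10240 "$free"; then
    echo "enough space for +10G"
else
    echo "not enough free space in the volume group"
fi
```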

# Shrink logical volume

lsblk

sudo apt update

sudo apt install gnome-disk-utility

ls -l

lsblk

ls -l /dev/kali-x-vg

ls -l /dev/kali-x-vg/root

ls -l /dev/dm-0

sudo lvreduce --resizefs -L 60G kali-x-vg/root # shrink the filesystem and the LV to 60G in one step

lsblk

df -h

## Create a new volume group on newly attached block device /dev/sdc

lsblk # check

sudo pvcreate /dev/sdc # create physical volume

sudo vgcreate vg_name /dev/sdc # create volume group

sudo vgdisplay # check

## Create a new logical volume

sudo lvcreate vg_name -L 5G -n lv_name # create 5GB logical volume

sudo lvdisplay # check

sudo mkfs.ext4 /dev/mapper/vg_name-lv_name # format the logical volume as Ext4

# mount the new logical volume

sudo mkdir -p /mnt/volume # create mount point

sudo mount /dev/mapper/vg_name-lv_name /mnt/volume # mount the volume

df -h # check

# mount it permanently via /etc/fstab (we can mount it by using the mapped path, but it is preferable to use the UUID)

sudo blkid /dev/mapper/vg_name-lv_name # get the UUID (universally unique identifier) of the logical volume

> /dev/mapper/vg_name-lv_name: UUID="b6ddc49d-...-...c90" BLOCK_SIZE="4096" TYPE="ext4"

sudo cp /etc/fstab{,.bak} # backup the fstab file

sudo umount /mnt/volume # unmount the new volume

df -h # check

sudo nano /etc/fstab

># <file system> <mount point> <type> <options> <dump> <pass>

> UUID=b6ddc49d-...-...c90 /mnt/volume ext4 defaults 0 2

# Test /etc/fstab for errors by mounting everything listed in it

sudo mount -a # no output means everything is correctly mounted

df -h # check
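The fstab entry shown above can also be assembled from the blkid output rather than typed by hand. A minimal sketch, assuming a hypothetical helper named fstab_line; it only prints the line and leaves editing /etc/fstab to you:

```shell
# fstab_line is a hypothetical helper: it prints one /etc/fstab entry
# with the same options, dump and pass values as the entry above.
# Usage: fstab_line <uuid> <mount_point> <fs_type>
fstab_line() {
    printf 'UUID=%s %s %s defaults 0 2\n' "$1" "$2" "$3"
}

# On a real system the UUID could be read with blkid (requires root):
#   uuid=$(sudo blkid -s UUID -o value /dev/mapper/vg_name-lv_name)
# A made-up placeholder UUID is used here for illustration only.
fstab_line "00000000-0000-0000-0000-000000000000" /mnt/volume ext4
```

Where your util-linux version provides it, findmnt --verify checks /etc/fstab without mounting anything, which is a gentler test than mount -a.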

Snapshots of Logical Volumes

Create a Snapshot

lvcreate – Create a logical volume.

- -s, --snapshot – Create a snapshot. Snapshots provide a "frozen image" of an origin LV. The snapshot LV can be used, e.g. for backups, while the origin LV continues to be used. This option can create a COW (copy on write) snapshot, or a thin snapshot (in a thin pool.) Thin snapshots are created when the origin is a thin LV and the size option is NOT specified.

- -n, --name – Specifies the name of a new LV. When unspecified, a default name of lvol# is generated, where # is a number generated by LVM.

Create a 5 GB snapshot of the logical volume lv_name in the volume group vg_name. The size is the space reserved for copy-on-write changes, not the size of the origin.

sudo lvcreate /dev/mapper/vg_name-lv_name -L 5G -s -n lv_name_ss_at_date_$(date +%y%m%d)

sudo lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

lv_name vg_name owi-aos--- 60.00g

lv_name_ss_at_date_220908 vg_name swi-a-s--- 5.00g lv_name 0.01
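A COW snapshot becomes invalid once its reserved space fills up, so the Data% column is worth watching. A minimal sketch, assuming a hypothetical helper named snap_over that reads "name percent" rows from standard input:

```shell
# snap_over is a hypothetical helper: it reads "<lv_name> <data_percent>"
# rows and prints the snapshots whose COW space is filled past a threshold.
# Usage: ... | snap_over <threshold_percent>
snap_over() {
    awk -v limit="$1" 'NF == 2 && $2 + 0 >= limit + 0 { print $1 " is " $2 "% full" }'
}

# On a real system (requires root); origin LVs have an empty data_percent
# column, so they produce one-field rows and are skipped:
#   sudo lvs --noheadings -o lv_name,data_percent vg_name | snap_over 80

# Trying it with made-up sample rows:
printf '%s\n' "lv_name_ss_at_date_220908 0.01" "other_snapshot 85.20" | snap_over 80
```

With the sample rows only "other_snapshot is 85.20% full" is printed.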

Mount a Snapshot

A snapshot can be mounted to retrieve files as they were when the snapshot was taken. In this case it is best to mount it read-only.

sudo mkdir /mnt/snapshot

sudo mount -r /dev/mapper/vg_name-lv_name_ss_at_date_220908 /mnt/snapshot

You can use a mounted snapshot (of the root filesystem) to create a backup while the OS is running.

Restore a Snapshot

Note that restoring a snapshot with the merge option removes the snapshot, so you must create another snapshot if you want to return to the same state of the logical volume later.

To restore a snapshot we need the commands lvconvert and lvchange.

LvConvert: Convert-merge the snapshot with the source LV

lvconvert – Change logical volume layout.

- --merge – An alias for --mergethin, --mergemirrors, or --mergesnapshot, depending on the type of LV (logical volume).

- --mergesnapshot – Merge COW snapshot LV into its origin. When merging a snapshot, if both the origin and snapshot LVs are not open, the merge will start immediately. Otherwise, the merge will start the first time either the origin or snapshot LV are activated and both are closed. Merging a snapshot into an origin that cannot be closed, for example a root filesystem, is deferred until the next time the origin volume is activated. When merging starts, the resulting LV will have the origin's name, minor number and UUID. While the merge is in progress, reads or writes to the origin appear as being directed to the snapshot being merged. When the merge finishes, the merged snapshot is removed. Multiple snapshots may be specified on the command line or a @tag may be used to specify multiple snapshots be merged to their respective origin.

sudo umount /lv_name-mountpoint

sudo lvconvert --merge /dev/mapper/vg_name-lv_name_ss_at_date_220908

If it is a root filesystem, and the source logical volume is not operational at all, we need to boot into a live Linux session to perform these steps, because the volume must be unmounted. If it is not a root filesystem, we need to unmount the logical volume first.

LvChange: Deactivate, reactivate and remount the LV

lvchange – Change the attributes of logical volume(s).

-a, --activate y|n|ay – Change the active state of LVs. An active LV can be used through a block device, allowing data on the LV to be accessed. y makes LVs active, or available. n makes LVs inactive, or unavailable…

sudo lvchange -an /dev/mapper/vg_name-lv_name

sudo lvchange -ay /dev/mapper/vg_name-lv_name

If we are working within a live Linux session (i.e. we are restoring a root filesystem), these steps will be performed at the next reboot. Otherwise we need to run the following commands to apply the changes without a reboot.

sudo mount -a # remount via /etc/fstab

df -h # list the mounted devices

Remove a Snapshot

lvremove – Remove logical volume(s) from the system.

sudo lvremove /dev/vg_name/lv_name_ss_at_date_220908

# or, equivalently, using the device-mapper path

sudo lvremove /dev/mapper/vg_name-lv_name_ss_at_date_220908

References

- Red Hat Docs: Red Hat > [9] > Configuring and managing logical volumes

- Learn Linux TV: Linux Logical Volume Manager (LVM) Deep Dive Tutorial

- Learn Linux TV at YouTube: Linux Logical Volume Manager (LVM) Deep Dive Tutorial

- Linux Hint: LVM: How to List and Selectively Remove Snapshots

Assume we want to set up LVM on /dev/sdb and use the entire disk. You can use fdisk or gdisk to create a new GPT partition table, which wipes all existing partitions, and then create the partitions you need:

- If the device /dev/sdb will be used as a boot device, you can create two partitions: one for /boot – Linux ext4, and one for the root fs / – Linux LVM.

- If the device /dev/sdb won't be used as a boot device, you can create only one partition for LVM.

Create partition table:

sudo fdisk /dev/sdb

# create a new empty GPT partition table

Command (m for help): g

# add a new partition

Command (m for help): n

Partition number (1-128, default 1): 1

First sector (2048-488397134, default 2048):

Last sector, +/-sectors or +/-size{K,M,G,T,P} (2048-488397134, default 488397134): +1G

Created a new partition 1 of type 'Linux filesystem' and of size 1 GiB.

# add a new partition

Command (m for help): n

Partition number (2-128, default 2):

First sector (2099200-488397134, default 2099200):

Last sector, +/-sectors or +/-size{K,M,G,T,P} (2099200-488397134, default 488397134):

Created a new partition 2 of type 'Linux filesystem' and of size 231.9 GiB.

# change a partition type

Command (m for help): t

Partition number (1,2, default 2): 2

Partition type (type L to list all types): 31

Changed type of partition 'Linux filesystem' to 'Linux LVM'.

# print the partition table

Command (m for help): p

Disk /dev/sdb: 232.91 GiB, 250059350016 bytes, 488397168 sectors

Disk model: CT250MX500SSD1

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: 005499CA-7834-434B-9C36-5306537C8CF1

Device Start End Sectors Size Type

/dev/sdb1 2048 2099199 2097152 1G Linux filesystem

/dev/sdb2 2099200 488397134 486297935 231.9G Linux LVM

Filesystem/RAID signature on partition 1 will be wiped.

# verify the partition table

Command (m for help): v

No errors detected.

Header version: 1.0

Using 2 out of 128 partitions.

A total of 0 free sectors is available in 0 segments (the largest is (null)).

# write table to disk and exit

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.
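The same layout can be scripted instead of typed interactively. The sketch below assumes the sgdisk tool from the gdisk package is installed, which the fdisk session above does not use; the helper name gpt_layout_cmd is hypothetical. It only prints the command, so it can be reviewed before being run by hand against the right disk.

```shell
# gpt_layout_cmd is a hypothetical helper: it PRINTS an sgdisk command and
# does not touch any disk. The printed command would:
#   --clear      create a new empty GPT (destroys existing partitions)
#   -n 1:0:+1G   partition 1, default start, 1 GiB long
#   -t 1:8300    type "Linux filesystem"
#   -n 2:0:0     partition 2, default start, rest of the disk
#   -t 2:8e00    type "Linux LVM"
gpt_layout_cmd() {
    printf 'sgdisk --clear -n 1:0:+1G -t 1:8300 -n 2:0:0 -t 2:8e00 %s\n' "$1"
}

gpt_layout_cmd /dev/sdb
```

Note that sgdisk type codes (8e00) differ from the numbered list in fdisk (31 in the session above), and the fdisk numbers vary between util-linux versions.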

Format the first partition /dev/sdb1 to ext4:

sudo mkfs.ext4 /dev/sdb1

mke2fs 1.45.5 (07-Jan-2020)

Creating filesystem with 262144 4k blocks and 65536 inodes

Filesystem UUID: a6a72cfe-46f1-4caa-b114-6bf03f1efe7f

Superblock backups stored on blocks:

32768, 98304, 163840, 229376

Allocating group tables: done

Writing inode tables: done

Creating journal (8192 blocks): done

Writing superblocks and filesystem accounting information: done

Create an LVM physical volume on the second partition /dev/sdb2:

sudo pvcreate /dev/sdb2

Physical volume "/dev/sdb2" successfully created.

Create an LVM volume group on /dev/sdb2:

sudo vgcreate lvm-vm-group /dev/sdb2

Volume group "lvm-vm-group" successfully created

lvm-vm-group is the name of the group; it is a matter of your choice.

Create an LVM logical volume in lvm-vm-group:

sudo lvcreate -n vm-win-01 -L 60g lvm-vm-group

Logical volume "vm-win-01" created.

vm-win-01 is the name of the logical volume; it is a matter of your choice.

Check the result:

lsblk | grep -P 'sdb|lvm'

├─sdb1 8:17 0 1G 0 part

└─sdb2 8:18 0 231.9G 0 part

└─lvm--vm--group-vm--win--01 253:0 0 60G 0 lvm
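The doubled hyphens in this output come from device-mapper naming: the VG and LV names are joined with a single hyphen, so every hyphen inside either name is doubled. A minimal sketch of that rule, assuming a hypothetical helper named dm_name:

```shell
# dm_name is a hypothetical helper: it prints the /dev/mapper path for a
# given VG and LV name by doubling the hyphens inside each name.
# Usage: dm_name <vg_name> <lv_name>
dm_name() {
    dm_vg=$(printf '%s' "$1" | sed 's/-/--/g')
    dm_lv=$(printf '%s' "$2" | sed 's/-/--/g')
    printf '/dev/mapper/%s-%s\n' "$dm_vg" "$dm_lv"
}

dm_name lvm-vm-group vm-win-01   # -> /dev/mapper/lvm--vm--group-vm--win--01
dm_name vg_name lv_name          # -> /dev/mapper/vg_name-lv_name
```

The /dev/lvm-vm-group/vm-win-01 form of the path refers to the same volume and needs no escaping.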

References:

- DigitalOcean: How To Use LVM To Manage Storage Devices on Ubuntu 18.04

- Ubuntu Community Wiki: Ubuntu Desktop LVM

- SleepLessBeastie's notes: How to fix device excluded by a filter