PVE Adopt a Native Ubuntu Server

This article documents the steps I performed to convert a native installation of Ubuntu Server 20.04 (installed on a physical SSD) into a Proxmox virtual machine. The article is divided into two main parts. The first part covers how to adopt the server by running the OS from the existing SSD installation. The second part describes how to convert the physical installation to a ProxmoxVE LVM image.

Part 1: Adopt the Physical Installation

In this part we will attach one SSD and three HDDs to a Proxmox virtual machine. The operating system is installed on the SSD, so we will attach this drive first.

Create a Proxmox VM

The references below include a few resources that explain how to create a virtual machine capable of running a Linux OS within ProxmoxVE, so the entire process is not covered here.

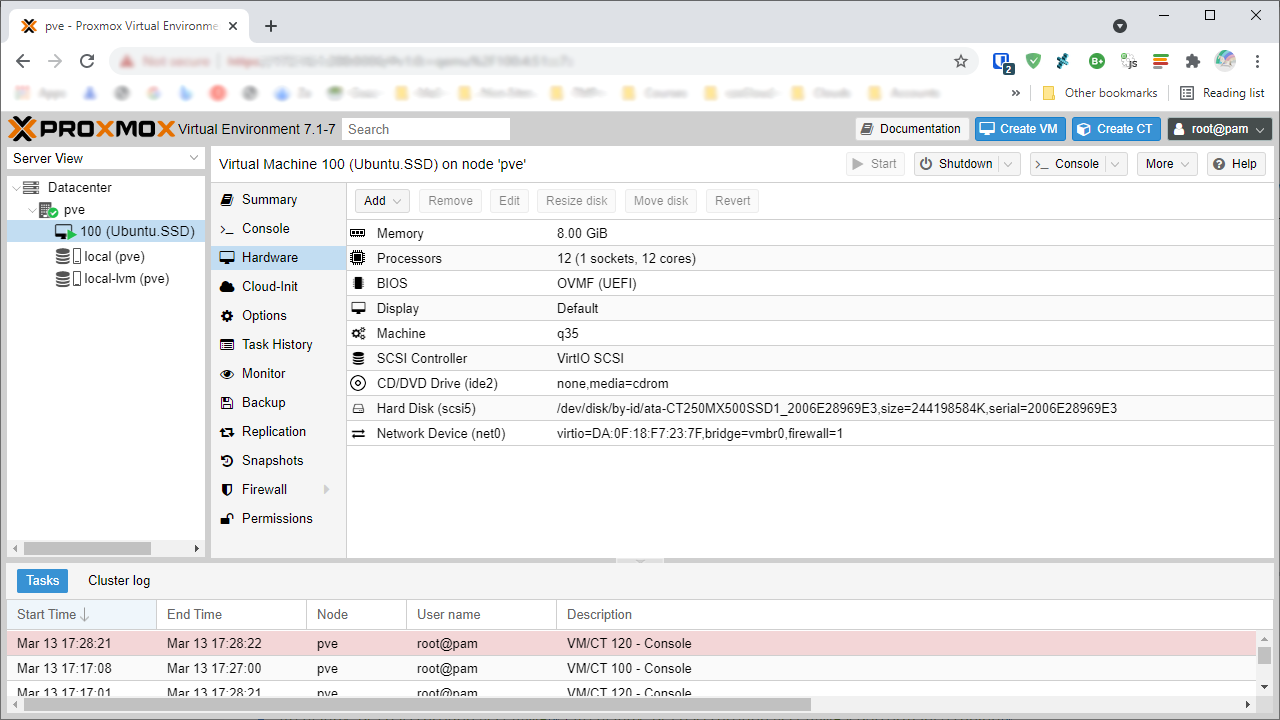

Initially, a Proxmox VM with Id:100 was created through the GUI. The final result of this operation is shown in Figure 1.

Attach the Physical Drives to the Proxmox VM

The physical drives – 1xSSD and 3xHDD – will be attached to the VM by their Ids. The serial numbers of the devices will also be passed to the VM. The following command should be executed on the Proxmox host's console. It will provide the necessary information for the next step.

lsblk -d -o NAME,SIZE,SERIAL -b | grep -E '^(sdb|sde|sdf|sdg)' | \
awk '{print $3}' | xargs -I{} find /dev/disk/by-id/ -name '*{}' | \
awk -F'[-_]' \
'{if($0~"SSD"){opt=",discard=on,ssd=1"}else{opt=""} {printf "%s\t%s \t \t%s\n",$(NF),$0,opt}}'

2006E28969E3 /dev/disk/by-id/ata-CT250MX500SSD1_2006E28969E3 ,discard=on,ssd=1

WCC4M1YCNLUP /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M1YCNLUP

WCC4M3SYTXLJ /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M3SYTXLJ

JP1572JE180URK /dev/disk/by-id/ata-Hitachi_HDS721050CLA662_JP1572JE180URK

# Ver.2 of the inline script:

QM=3015 IDX=10 TYPE="sata" DEVICES="(sda|sdb|sdc|sdf)"
lsblk -d -o NAME,SIZE,SERIAL -b | \
grep -E "^${DEVICES}" | \
awk '{print $3}' | \
xargs -I{} find /dev/disk/by-id/ -name '*{}' | \
awk -F'[_]' -v qm=$QM -v idx=$IDX -v type=$TYPE \
'{if($0~"SSD"){opt=",discard=on,ssd=1"}else{opt=""} {printf "qm set %d -%s%d %s,serial=%s%s\n",qm,type,NR-1+idx,$0,$(NF),opt}}'

qm set 3015 -sata10 /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M3SYTXLJ,serial=WD-WCC4M3SYTXLJ,

qm set 3015 -sata11 /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M1YCNLUP,serial=WD-WCC4M1YCNLUP,

qm set 3015 -sata12 /dev/disk/by-id/ata-TOSHIBA_DT01ACA200_Y4BY48MAS,serial=Y4BY48MAS,

qm set 3015 -sata13 /dev/disk/by-id/ata-SSDPR-CX400-256_GV2024436,serial=GV2024436,discard=on,ssd=1

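- Note: In both versions of the inline script, the automatic detection of the options discard=on,ssd=1 is only a rough heuristic. It matches the string SSD in the device Id, so an SSD whose Id lacks that string is missed.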
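A less name-dependent way to make that decision is to ask the kernel whether the disk is rotational. The sketch below reads the flag from /sys/block/<dev>/queue/rotational (0 means non-rotational). The function name disk_opts and its optional second argument are my own choices for illustration and are not part of the scripts above.

```shell
#!/bin/sh
# disk_opts DEV [SYS_ROOT]
# Prints ",discard=on,ssd=1" when the kernel reports DEV as non-rotational
# and prints nothing otherwise. SYS_ROOT defaults to /sys; it is a parameter
# only so the function can be tried against a fake directory tree.
disk_opts() {
    dev=$1
    sys_root=${2:-/sys}
    flag_file="$sys_root/block/$dev/queue/rotational"
    # A missing flag file is treated like a rotational disk: no extra options.
    [ -r "$flag_file" ] || return 0
    if [ "$(cat "$flag_file")" = "0" ]; then
        printf '%s' ',discard=on,ssd=1'
    fi
}
```

On the Proxmox host, disk_opts sdb would print the extra options for sdb only if it is reported as an SSD. Some USB enclosures and controllers report the flag incorrectly, so the result is still worth a quick manual check.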

In the first command above, (sdb|sde|sdf|sdg) are the names of the four devices on the host side. The output is in the same order. The first column of the output contains the serial numbers of the devices, the second column their Ids, and the third column indicates whether the device is an SSD.

The next four commands attach these devices to the virtual machine with Id:100. They use the second column of the output above.

qm set 100 -scsi0 /dev/disk/by-id/ata-CT250MX500SSD1_2006E28969E3

qm set 100 -scsi1 /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M1YCNLUP

qm set 100 -scsi2 /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M3SYTXLJ

qm set 100 -scsi3 /dev/disk/by-id/ata-Hitachi_HDS721050CLA662_JP1572JE180URK

Tweak the Proxmox VM

Next, some additional tweaks are made directly in the configuration file of the virtual machine with Id:100. The most notable changes are in the scsi0 to scsi3 lines, where entries for the serial numbers of the devices are added, e.g. ,serial=JP1572JE180URK.

nano /etc/pve/qemu-server/100.conf

agent: 1

balloon: 4096

bios: ovmf

boot: order=scsi0

cores: 8

cpu: kvm64

machine: q35

memory: 12288

meta: creation-qemu=6.1.0,ctime=1647164728

name: Ubuntu.SSD

net0: virtio=DA:0F:18:F7:23:7F,bridge=vmbr0,firewall=1

numa: 1

onboot: 1

ostype: l26

scsi0: /dev/disk/by-id/ata-CT250MX500SSD1_2006E28969E3,discard=on,size=244198584K,ssd=1,serial=2006E28969E3

scsi1: /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M1YCNLUP,size=1953514584K,serial=WCC4M1YCNLUP

scsi2: /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M3SYTXLJ,size=1953514584K,serial=WCC4M3SYTXLJ

scsi3: /dev/disk/by-id/ata-Hitachi_HDS721050CLA662_JP1572JE180URK,size=488386584K,serial=JP1572JE180URK

scsihw: virtio-scsi-pci

shares: 1001

smbios1: uuid=70dc4258-04d7-4255-bec8-0c7d0cd9a0c1

sockets: 2

vcpus: 16

vmgenid: 39632f8b-b9d2-4263-87c3-f6ef0814ab71
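As an alternative to editing the file by hand, the serial entries can be passed to qm set directly, in the same path,serial=... form that the Ver.2 inline script prints. The sketch below only prints the commands so they can be reviewed first. The helper names serial_from_id and print_qm_set are hypothetical, and the serial is simply the part of the Id after the last "-" or "_", as in the first inline script.

```shell
#!/bin/sh
# serial_from_id PATH
# Prints the part of a /dev/disk/by-id/ path after the last "-" or "_",
# mirroring the awk -F'[-_]' '{print $(NF)}' logic used earlier.
serial_from_id() {
    printf '%s\n' "${1##*[-_]}"
}

# print_qm_set VMID FIRST_INDEX PATH...
# Prints (does not run) one "qm set" line per disk, numbering the scsi
# slots upwards from FIRST_INDEX.
print_qm_set() {
    vmid=$1
    idx=$2
    shift 2
    for path in "$@"; do
        printf 'qm set %s -scsi%s %s,serial=%s\n' \
            "$vmid" "$idx" "$path" "$(serial_from_id "$path")"
        idx=$((idx + 1))
    done
}
```

For example, print_qm_set 100 1 followed by the three HDD paths prints the lines for scsi1 to scsi3. Keep in mind that qm set replaces the whole option string of a slot, so options such as discard=on,ssd=1 for the SSD have to be included on the same line.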

Run the Proxmox VM

Our VM with Id:100 is ready to be started. This can be done either through the GUI or from the command line.

qm start 100

To force-stop the VM from the command line, one can do the following. Usually, when the "guest tools" are installed, we do not need to remove the lock file.

rm /var/lock/qemu-server/lock-100.conf

qm stop 100

Setup the Guest OS Ubuntu Server

Once the guest OS is running, the very first step is to install the QEMU/KVM guest tools.

sudo apt update

sudo apt install qemu-guest-agent

sudo apt install spice-vdagent

The next step is to reconfigure the network, because the network adapter has changed from a physical one to a virtual one and it is not configured yet. You may use the command ip a to find the adapter's name. Then update the network configuration and apply the new settings, or reboot the guest system entirely. In my case I've done the following.

sudo nano /etc/netplan/00-installer-config.yaml

network:
  version: 2
  ethernets:
    enp6s18:
      dhcp4: false
      dhcp6: false
      addresses: [172.16.1.100/24]
      gateway4: 172.16.1.1
      nameservers:
        addresses: [172.16.1.1, 8.8.8.8, 8.8.4.4]

sudo netplan try

sudo netplan apply

sudo netplan --debug apply # in order to debug a problem

sudo systemctl restart systemd-networkd.service networking.service
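Because YAML indentation is easy to break in an editor, the same file can also be generated from a few values. This is only a sketch under my assumptions: the function name render_netplan is hypothetical, and the layout is the one from the file above (one interface, static address, gateway4 as used on Ubuntu 20.04).

```shell
#!/bin/sh
# render_netplan IFACE ADDRESS_CIDR GATEWAY DNS_LIST
# Prints a static-address netplan document to stdout. DNS_LIST is a
# comma-separated string that is placed inside the YAML list as-is.
render_netplan() {
    cat <<EOF
network:
  version: 2
  ethernets:
    $1:
      dhcp4: false
      dhcp6: false
      addresses: [$2]
      gateway4: $3
      nameservers:
        addresses: [$4]
EOF
}
```

The values from my setup would be passed as render_netplan enp6s18 172.16.1.100/24 172.16.1.1 "172.16.1.1, 8.8.8.8, 8.8.4.4". The output can be piped through sudo tee into the netplan file and then checked with sudo netplan try.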

The next step is to check whether the attached HDDs are recognized correctly. In my case the partitions are mounted by uuid and everything was fine.

lsblk -o NAME,SERIAL,UUID | grep '^.*sd.'

sda 2006E28969E3

├─sda1 84D2-3327

└─sda2 09e7c8ed-fb55-4a44-8be4-18b1696fc714

sdb JP1572JE180URK

├─sdb1 A793-2FD3

└─sdb2 b6ec78e6-bf2e-4cf0-bb1e-0042645af13b

sdc WCC4M3SYTXLJ

└─sdc1 9aa5e7bb-a0ca-463c-856b-86a3b9b4944a

sdd WCC4M1YCNLUP

└─sdd1 5d0b4c70-8936-41ef-8ac5-eba6f3142d9d

sudo nano /etc/fstab

/dev/disk/by-uuid/84D2-3327 /boot/efi vfat defaults 0 0

/dev/disk/by-uuid/09e7c8ed-fb55-4a44-8be4-18b1696fc714 / ext4 discard,async,noatime,nodiratime,errors=remount-ro 0 1

/dev/disk/by-uuid/9aa5e7bb-a0ca-463c-856b-86a3b9b4944a /mnt/2TB-1 auto nosuid,nodev,nofail 0 0

/dev/disk/by-uuid/5d0b4c70-8936-41ef-8ac5-eba6f3142d9d /mnt/2TB-2 auto nosuid,nodev,nofail 0 0

/dev/disk/by-uuid/b6ec78e6-bf2e-4cf0-bb1e-0042645af13b /mnt/500GB auto nosuid,nodev,nofail 0 0

/swap.4G.img none swap sw 0 0
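To double-check that every UUID in /etc/fstab really exists inside the guest, the two lists can be compared. The sketch below is one possible way to do it. The function names fstab_uuids and missing_uuids are hypothetical, and only the first fstab field is inspected.

```shell
#!/bin/sh
# fstab_uuids FILE
# Prints every UUID referenced in the first field of FILE, one per line,
# for both the /dev/disk/by-uuid/<UUID> and the UUID=<UUID> notations.
# Comment lines and blank lines are skipped.
fstab_uuids() {
    awk '
        /^[ \t]*(#|$)/ { next }
        $1 ~ /^\/dev\/disk\/by-uuid\// { sub(/^\/dev\/disk\/by-uuid\//, "", $1); print $1; next }
        $1 ~ /^UUID=/ { sub(/^UUID=/, "", $1); print $1 }
    ' "$1"
}

# missing_uuids FILE KNOWN_UUIDS_FILE
# Prints the UUIDs from FILE that do not appear in KNOWN_UUIDS_FILE
# (one UUID per line, for example the output of: lsblk -no UUID).
missing_uuids() {
    fstab_uuids "$1" | grep -Fvx -f "$2"
}
```

Inside the guest this could be used as lsblk -no UUID > /tmp/known-uuids followed by missing_uuids /etc/fstab /tmp/known-uuids. No output means every referenced UUID was found.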

Finally, I've removed the IOMMU settings, which are no longer needed in this instance.

sudo nano /etc/default/grub

#GRUB_CMDLINE_LINUX_DEFAULT="intel_iommu=on iommu=pt kvm.ignore_msrs=1 kvm.report_ignored_msrs=0 irqpoll vfio-pci.ids=10de:1021"

GRUB_CMDLINE_LINUX=""

sudo nano /etc/modprobe.d/blacklist.conf

# Blacklist NVIDIA Modules: 'lsmod', 'dmesg' and 'lspci -nnv'

#blacklist nvidiafb

#blacklist nouveau

#blacklist nvidia_drm

#blacklist nvidia

#blacklist rivatv

#blacklist snd_hda_intel
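Changes in /etc/default/grub only take effect after sudo update-grub and a reboot. To confirm that none of the old passthrough parameters are still active, the running kernel command line can be filtered as sketched below. The function name leftover_passthrough_args is hypothetical, and the parameter list is taken from the commented-out line above.

```shell
#!/bin/sh
# leftover_passthrough_args CMDLINE
# Prints each kernel parameter in CMDLINE that belongs to the old
# passthrough setup (intel_iommu=, iommu=, vfio-pci.ids=), one per line.
leftover_passthrough_args() {
    printf '%s\n' "$1" | tr ' ' '\n' |
        grep -E '^(intel_iommu|iommu|vfio-pci\.ids)='
}
```

After the reboot, leftover_passthrough_args "$(cat /proc/cmdline)" should print nothing.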

In addition, you can inspect the system logs for any errors or unnecessary modules, such as drivers for Wi-Fi adapters and so on.

cat /var/log/syslog | grep -Pi 'failed|error'

sudo journalctl -xe

Part 2: Convert the Physical Installation to a ProxmoxVE Image

This section uses the "Clonezilla Live CD" migration approach described in the ProxmoxVE documentation (see the references below). All performed steps are shown in Video 1, and the essential parts are explained in the sub-sections below. The migration will be done from the VM with Id:100 to a VM with Id:201.

Prepare the Source OS for Migration

In this step the swap file of the source installation of Ubuntu Server 20.04 is shrunk and some unnecessary services are removed. Finally, as shown in Video 1[0:15], a backup copy of the entire source SSD is created with Clonezilla running on a dedicated virtual machine, to which the SSD is attached along with one additional HDD to store the backup.
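The exact commands for shrinking the swap file are only shown in the video, so the following is a sketch of one common way to do it, not necessarily what I typed. The file name /swap.4G.img comes from the fstab above; the new size and the helper names run and shrink_swapfile are assumptions. By default the commands are only printed.

```shell
#!/bin/sh
# run CMD...
# Prints the command when DRY_RUN is 1 (the default here), runs it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# shrink_swapfile FILE SIZE_MIB
# Recreates FILE as a swap file of SIZE_MIB mebibytes.
shrink_swapfile() {
    file=$1
    size_mib=$2
    run swapoff "$file"
    # dd truncates the existing file, so the old, larger size is not kept.
    run dd if=/dev/zero of="$file" bs=1M count="$size_mib"
    run chmod 600 "$file"
    run mkswap "$file"
    run swapon "$file"
}
```

Calling shrink_swapfile /swap.4G.img 1024 prints the five commands for a 1 GiB swap file. Once they look right, the same call with DRY_RUN=0 as root executes them. The fstab entry stays valid because the file name does not change.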

Shrink the Source Partition before the migration

For this task the tool gparted is used on another virtual machine running Ubuntu Desktop – Video 1[0:45]. You can use a live session of Ubuntu Desktop instead. Again, the SSD is attached to the VM as shown in the previous section.

Test Whether the Source Instance is Operational

Power-up the virtual machine with Id:100 and check whether everything works fine after the shrinking of the main partition – Video 1[3:30].

Prepare an Existing Drive as New LVM Pool

Strictly speaking, this step is not part of the migration, but it wasn't done in advance, so I've decided to include it here – Video 1[4:00]. Our new VM's image will be created on local-lvm-CX400.

Create the Destination Virtual Machine

In this step a new virtual machine with Id:201 is created that will be used from here on – Video 1[4:55]. The (image) drive of this VM is the destination of the transfer performed in the next steps. Importantly, the new (virtual) disk should be larger than the partition shrunk in the step above.
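The size requirement can be checked with plain byte arithmetic before creating the disk. The sketch below assumes the virtual disk size is given in whole GiB, as in the size=84G option of the new VM. The function names gib_to_bytes and fits are hypothetical.

```shell
#!/bin/sh
# gib_to_bytes N
# Converts whole gibibytes to bytes.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# fits SRC_BYTES DST_BYTES
# Succeeds when the source partition is strictly smaller than the
# destination disk, leaving some room for the other partitions.
fits() {
    [ "$1" -lt "$2" ]
}
```

With the source SSD attached, the partition size in bytes can be read with lsblk -bno SIZE on the shrunk partition and passed as the first argument, for example fits "$(lsblk -bno SIZE /dev/sdX2)" "$(gib_to_bytes 84)" && echo ok, where sdX2 stands for the partition on your system.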

Note that the new VM Id:201 uses SeaBIOS while the source VM Id:100 runs in UEFI mode, so after the partition transfer we will need to repair the boot loader in order to run the OS in BIOS mode. The final configuration file of the new VM with Id:201 is shown below – some of the listed options are not configured yet.

nano /etc/pve/qemu-server/201.conf

agent: 1,fstrim_cloned_disks=1

boot: order=scsi0

cipassword: user$password

ciuser: user

cores: 8

cpu: kvm64,flags=+pcid

ide0: local-lvm-cx400:vm-201-cloudinit,media=cdrom

ipconfig0: ip=172.16.1.100/24,gw=172.16.1.1

machine: q35

memory: 12288

meta: creation-qemu=6.1.0,ctime=1647864994

name: Ubuntu.Server

net0: virtio=E2:88:AC:E7:FD:C2,bridge=vmbr0,firewall=1

numa: 1

onboot: 1

ostype: l26

scsi0: local-lvm-cx400:vm-201-disk-0,discard=on,iothread=1,size=84G,ssd=1

scsi1: /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M1YCNLUP,size=1953514584K,serial=WCC4M1YCNLUP

scsi2: /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M3SYTXLJ,size=1953514584K,serial=WCC4M3SYTXLJ

scsi3: /dev/disk/by-id/ata-Hitachi_HDS721050CLA662_JP1572JE180URK,size=488386584K,serial=JP1572JE180URK

scsihw: virtio-scsi-single

serial0: socket

smbios1: uuid=21f444b4-0222-402c-9b88-36h397021aa4

sockets: 2

sshkeys: ssh-rsa...

vmgenid: a9183f95-00a4-40be-8bad-59ed6164d144

Install Ubuntu Server as Placeholder

Install Ubuntu Server at the new VM with Id:201 as placeholder – use the entire disk, and don't use the LVM option – Video 1[6:45].

Setup Clonezilla for the Destination VM

This step is where the actual transfer setup begins – Video 1[8:15]. For this purpose Clonezilla's ISO image is attached to the (virtual) CD drive of VM Id:201 and marked as the primary boot device.

The following options are chosen in Clonezilla's wizard:

- Choose Language
- Keep the default keyboard layout
- Start Clonezilla
- remote-dest – Enter destination mode of remote device cloning
- dhcp – after that Clonezilla will ask you for the IP address of the remote-source server (VM Id:100).

Setup Clonezilla for the Source VM

On the source VM Id:100 we should also load Clonezilla's ISO image as a CD drive and set it as the boot drive. We also need to switch to SeaBIOS in order to boot Clonezilla's image without additional headaches. Then we can run the VM – Video 1[9:35].

The following options are chosen in Clonezilla's wizard:

- Choose Language
- Keep the default keyboard layout
- Start Clonezilla
- remote-source – Enter source mode of remote device cloning
- Beginner
- part_to_remote_part – Local partition to remote partition clone
- dhcp
- Choose the partition shrunk in the step above – sdd2
- -fsck
- -p
- Once the IP address of the source instance is obtained, go back to the remote-destination instance VM Id:201.

Start the Remote Source-Destination Transfer

Go back to the remote-destination instance VM Id:201 – Video 1[12:15] and complete the wizard:

- Enter the IP address of the source server
- restoreparts – Restore an image onto a disk partition
- Choose the partition – in this case it is sda2
- Hit Enter and confirm the Warning! questions.

Shutdown the Virtual Machines

The transfer will take a few minutes. After that you have to shut down the virtual machines – Video 1[13:15].

Inspect the Size of the New Drive

Use the ProxmoxVE GUI to check whether everything looks correct – Video 1[13:25]. The new image's size will be smaller than the given virtual disk's size because we are using LVM-Thin.

Repair the Boot loader: Part 1

In this step an Ubuntu Desktop live session is used in order to repair Grub, because we are migrating the OS from UEFI to BIOS mode – Video 1[13:55]. However, this step and the next one could be simplified and done within the Clonezilla session…

This approach uses the tool boot-repair (check the references), which is installed within the live session by the following commands:

sudo parted -l # test GPT

sudo add-apt-repository ppa:yannubuntu/boot-repair

sudo apt update

sudo apt install -y boot-repair

boot-repair

Repair the Boot loader: Part 2

It is time to boot the new VM Id:201 from the already cloned OS. Remove the CD images and set the right boot order of the VM – Video 1[18:15]. First we will go into Recovery mode; if the Grub menu is not enabled, hit the Esc key at the beginning of the boot process. Choose the option Drop to root shell prompt and use the following commands to tweak the Grub options and finally power off the VM.

sudo nano /etc/default/grub

sudo update-grub

sudo poweroff

Final: Attach the Physical Hard Drives

This step uses the same commands as in the section above, but we attach only the HDDs because the OS is already migrated to a ProxmoxVE image – Video 1[21:25].

qm set 201 -scsi1 /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M1YCNLUP

qm set 201 -scsi2 /dev/disk/by-id/ata-WDC_WD20PURZ-85GU6Y0_WD-WCC4M3SYTXLJ

qm set 201 -scsi3 /dev/disk/by-id/ata-Hitachi_HDS721050CLA662_JP1572JE180URK

Now we can boot the VM as normal and test whether everything works fine :)
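One quick test inside the guest is to confirm that it really boots in BIOS mode now. A Linux system booted through UEFI exposes the directory /sys/firmware/efi, and the sketch below checks for it. The function name boot_mode and its optional argument are my own choices for illustration.

```shell
#!/bin/sh
# boot_mode [SYS_ROOT]
# Prints "UEFI" when the kernel exposes the EFI firmware directory and
# "BIOS" otherwise. SYS_ROOT defaults to /sys and exists only so the
# function can be tried against a fake directory tree.
boot_mode() {
    if [ -d "${1:-/sys}/firmware/efi" ]; then
        echo UEFI
    else
        echo BIOS
    fi
}
```

On the migrated VM Id:201, boot_mode is expected to print BIOS, while on the source VM Id:100 it would have printed UEFI.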

References

- LearnLinuxTV: Proxmox VE Full Course: Class 5 – Launching a Virtual Machine

- Proxmox HHS: Proxmox VE 6.0 Beginner Tutorial – Installing Proxmox & Creating a virtual machine.

- ProxmoxVE Wiki: Passthrough Physical Disk to Virtual Machine (VM)

- ProxmoxVE Wiki: Migration of servers to Proxmox VE (Clonezilla Live CDs)

- SyncBricks: Move Physical Servers and Virtual Machines to Proxmox VE 6.3 – Using Clonezilla

- Clonezilla: Downloads

- ProxmoxVE Documentation at GitHub: Qemu/KVM Virtual Machines (All options within the GUI interface explained)

- BLOG‑D Without Nonsense: How to Passthrough HDD/SSD/Physical disks to VM on Proxmox VE

- VITUX Linux Compendium: How to configure networking with Netplan on Ubuntu

- Average Linux User: How to shrink a Linux partition without losing data?

- LinuxConfig.org: How to increase TTY console resolution on Ubuntu 18.04 Server

- LinuxConfig.org: How to change TTY console font size on Ubuntu 18.04 Server

- Ask Ubuntu: Resize font on boot message screen and console

- Nezhar.com: Fix for Ubuntu 19.10 stuck on splash screen

- NewBeDev.com: Convert from EFI to BIOS boot mode

- Ask Ubuntu: Convert from EFI to BIOS boot mode

- Ubuntu Documentation: Boot-Repair